In its broadest sense, genetics is the science of biological variation. It focuses on variation that results from inheritance, the process by which characters are passed from parents to their offspring. This often places genetics in contrast to the environment, even though one is not sufficient without the other. For thousands of years, humans have been interested in how characters are inherited in plants, animals, and themselves. Much was learned over the centuries through observations and repetition of what seemed to work in agriculture and animal breeding. But it took an Austrian monk working in the middle of the 19th century to conceptualize what was happening when characters were passed on. The monk, of course, was Gregor Mendel, and we are now more than a century and a half into the discipline he fathered.

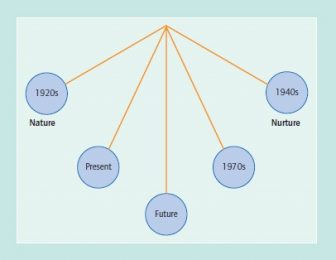

The history of genetics has been colored by the best and the worst of times. The field has suffered through periods of extreme controversy. Its practitioners have varied from those whose ideas could not help but be controversial given the views of the society in which they emerged, to those who quietly worked in their laboratories to develop the theories and technologies to drive the engine of genetics. Both scientific and societal concerns have driven the swings of the so-called nature-nurture pendulum (see figure). Nature versus nurture refers to the influences of biology, that is, genetics, versus the environment, in the determination of characters, be they physical or behavioral. The swings of the pendulum reflect periods of time during which the scientific community and society viewed either nature or nurture as the more important determinant. These swings have clearly influenced developments in genetics and will be highlighted as we trace the history of this science.

The following history of genetics covers first its roots in the 19th century. The next period, 1900 to 1953, spans from the rediscovery and promotion of Mendel’s seminal work to the discovery of the basic structure of deoxyribonucleic acid (DNA) in 1953 and the beginnings of molecular genetics. Next, a special section addresses the eugenics movement that began in the 19th century and slowly disintegrated in the aftermath of World War II. Finally, from the 1950s to the present day, the field of molecular genetics developed and tremendously influenced the way all of genetics is practiced, leading to the current era of genomics.

Swings of the nature-nurture pendulum.

The 19th Century: Roots of Genetics

The names of three individuals dominate the 19th century relative to the roots of genetics: Charles Darwin, Gregor Mendel, and Francis Galton. In 1859, Charles Darwin presented extensive scientific evidence for what he called natural selection in his book On the Origin of Species. Although it was initially met with skepticism, Darwin’s theory profoundly influenced the ideas of scientists and geneticists of future generations; Darwin perhaps had more impact on the course of genetics and society than any other naturalist. However, Darwin never produced a satisfactory explanation for the genetic source of the variation in characters that he understood to be the target of selection.

Only 6 years after Darwin’s publication, Mendel presented his work on the inheritance of characters in peas, which seems to have been virtually ignored by the scientists of his time. His work was so advanced that its results were not understood until it was rediscovered in 1900 by three scientists. Between Mendel’s initial publications during the 1860s and the rediscovery of his work, the third individual began his own work.

Francis Galton, a cousin of Charles Darwin and just as much a naturalist as Darwin, became the pioneering promoter of eugenics, a term he coined that literally means “wellborn.” In 1865, Galton opined that if as much effort and cost went toward improving biological variation in humans as was spent on breeding improvements in farm animals, we could surely improve humankind’s cognitive abilities. Galton thought that biological inheritance of leadership qualities determined the social status of Britain’s ruling classes, seemingly a logical extension of Darwin’s theory of evolution. However, whereas Darwin never discussed the social implications of his ideas, Galton actively advocated applying them to society. Furthering Galton’s naturalistic view of the world, the Englishman Herbert Spencer introduced the concept of Social Darwinism. He drew analogies between society and biological organisms in a book that sold more than 400,000 copies between 1860 and 1903. Using the principle of evolution as a universal law and considering competition as the key to evolutionary progress, Spencer fostered a widespread belief that government welfare interfered with the evolutionary process by favoring survival of the unfit. At the time, however, little was known scientifically about how specific traits were inherited, and without immediate results to confirm Spencer’s and Galton’s ideas, the public essentially lost interest. This was all about to change with the rediscovery of Mendel’s experiments and the explanation of how characters were passed from parent to offspring.

1900-1953: Classical Genetics

Many pioneers in biology, only some of whom will be highlighted here, led the early work in genetics. At the turn of the century, research in genetics had greatly accelerated, and explanations for the transmission of traits were being found through the rediscovery of Mendel’s work. While Hugo de Vries and Erich von Tschermak were initially given credit as having independently rediscovered Mendel’s work, their publications are now judged to have been vague. Some think they might have misunderstood Mendel’s concepts. It was a third scientist, Carl Correns, who was able to demonstrate clearly how Mendelian ratios were derived theoretically. He indicated that discrete units, known eventually as genes, somehow factored into causing the traits observed in offspring. This exciting rediscovery of Mendel’s work fueled the younger generation of geneticists because Mendel’s laws served as a foundation for predicting the transmission of characters from parents to offspring.

As a consequence of the interest in and promotion of Mendel’s ideas after the turn of the 20th century, two opposing scientific groups developed. One saw Mendel’s ideas as providing the prime basis of explanation for the inheritance of characters and came to be known as the Mendelists. The other, known as biometricians, had its origins in the work of Galton on metric traits such as height and did not think that Mendelian principles offered the models to explain the inheritance of these traits. William Bateson led the Mendelists, while the statistician Karl Pearson and Walter F. R. Weldon led the biometricians. Ultimately, most geneticists of the time would reconcile the differences between the Mendelians and the biometricians. The culmination came in 1918, when Ronald A. Fisher published his classic paper showing that characters controlled by large numbers of Mendelian factors (genes) would show the type of continuous, quantitative distribution (normal bell-shaped curve) and correlations between family members like parents and offspring that Galton and later the biometricians described. This publication marks the beginnings of classical quantitative genetics, the analysis of the genetics of metric characters such as height, head length, and the like.
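Why many Mendelian factors produce a smooth bell curve can be seen with a deliberately simplified model. The Python sketch below is our own illustration, not Fisher’s actual derivation: it assumes unlinked loci, two alleles at frequency 0.5 at every locus, Hardy-Weinberg proportions, and purely additive effects, so the number of trait-increasing alleles an individual carries is binomially distributed. One locus gives the familiar 1:2:1 Mendelian classes; twenty loci give 41 classes that trace out a near-normal curve.

```python
from math import comb


def trait_distribution(n_loci: int) -> list[float]:
    """Probability of carrying k trait-increasing alleles, k = 0..2 * n_loci.

    Simplifying assumptions (not Fisher's full model): each locus has two
    alleles at frequency 0.5, loci are unlinked, genotypes follow
    Hardy-Weinberg proportions, and every increasing allele adds one unit
    to the trait, so the total is binomial(2 * n_loci, 0.5).
    """
    n_alleles = 2 * n_loci
    return [comb(n_alleles, k) / 2**n_alleles for k in range(n_alleles + 1)]


def mean_and_variance(dist: list[float]) -> tuple[float, float]:
    """Mean and variance of a distribution indexed by trait value k."""
    mean = sum(k * p for k, p in enumerate(dist))
    var = sum((k - mean) ** 2 * p for k, p in enumerate(dist))
    return mean, var


# One locus: the Mendelian 1:2:1 classes.
print(trait_distribution(1))  # [0.25, 0.5, 0.25]

# Twenty loci: a text histogram of 41 trait classes.
for k, p in enumerate(trait_distribution(20)):
    print(f"{k:2d} {'#' * round(p * 400)}")
```

Adding environmental variation on top of these discrete classes would smooth the curve further, which is closer to what the biometricians actually measured.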

In the early 1900s, population genetics, the study of how genes are distributed and maintained in populations, had its beginning. In 1908, G. H. Hardy published a paper in the journal Science regarding the application of mathematical methods to biology. Hardy explained how the frequencies of genes in populations would remain the same from one generation to the next unless certain forces, now known as the mechanisms of evolutionary change, were operable in the population. That same year, Wilhelm Weinberg, publishing in German, stated the same principles as Hardy.
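The stability Hardy and Weinberg described can be checked numerically. The following Python sketch is an illustration only; the genotype labels and starting frequencies are hypothetical. It assumes an autosomal locus with two alleles, random mating, and none of the forces of evolutionary change. Starting from a population that is not in Hardy-Weinberg proportions, one round of random mating produces genotype frequencies of p², 2pq, and q², and the allele frequency p then stays fixed in every later generation.

```python
def allele_frequency(genotypes: dict[str, float]) -> float:
    """Frequency p of allele A: all of AA plus half of Aa."""
    return genotypes["AA"] + 0.5 * genotypes["Aa"]


def random_mating(genotypes: dict[str, float]) -> dict[str, float]:
    """Offspring genotype frequencies after one generation of random mating.

    Assumes an autosomal locus with two alleles and no selection,
    mutation, migration, or drift.
    """
    p = allele_frequency(genotypes)
    q = 1.0 - p
    return {"AA": p * p, "Aa": 2 * p * q, "aa": q * q}


# Hypothetical starting population, not in Hardy-Weinberg proportions.
parents = {"AA": 0.5, "Aa": 0.2, "aa": 0.3}
gen1 = random_mating(parents)
gen2 = random_mating(gen1)

print(allele_frequency(parents), allele_frequency(gen1), allele_frequency(gen2))
print(gen1)
print(gen2)
```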

Gregor Mendel

Starting in the second decade of the 20th century, the mathematical basis and theories of population genetics were further developed by three individuals. Fisher has already been mentioned. Between 1922 and 1929, he developed a theory of evolution in which he combined Mendelian inheritance and Darwin’s natural selection. During this period, he published several papers that culminated in his 1930 book The Genetical Theory of Natural Selection. About the same time as Fisher, an English biologist, J. B. S. Haldane, was beginning his career. A paper published in 1915 by Haldane was the first to show genetic linkage in mammals. After returning to England from World War I, Haldane continued his research in population genetics. His publications from 1924 through 1932, and lectures presented in 1931, titled “A Re-Examination of Darwinism,” led to the book The Causes of Evolution in 1932.

Sewall Wright, an American, was the third in the group of major contributors to population genetics. He was an early user of the principles of Hardy and Weinberg. He is probably best known today for having stressed the importance of random genetic drift as an explanation for changes in gene frequencies in small populations. Wright lived well into his 90s and contributed immensely to the theoretical development of population genetics for more than 70 years, an extraordinary accomplishment. Fisher, Haldane, and Wright were essential to the vitality of population genetics from the 1920s and well beyond. They were conscientious enough to consider with an open mind not only evolutionary genetics but also the effects of environment on heredity. This was vastly different from the scientists who promulgated eugenics, who viewed the effects of heredity as primary.

Another major force in the development of genetics during the 1920s was Hermann J. Muller. His paper on “Artificial Transmutation of a Gene” was published in 1927 in Science. Earlier, Muller had worked in the lab of Thomas Hunt Morgan at Columbia University. Morgan and his students and coworkers originated the use of the fruit fly Drosophila in research to study the mechanisms of inheritance. In 1915, Morgan’s research group published the important text, The Mechanism of Mendelian Heredity. After leaving Columbia in 1920 for the University of Texas, Muller became interested in the mutagenic effects of X-rays. His subsequent experiments, reported in the 1927 paper, marked the first time any laboratory had been able to cause a permanent, heritable change in the genes of an organism. This work convinced Muller that almost all mutations are deleterious.

For many years, Mendelian genetics and Darwinian evolution were viewed by the geneticists of the day as two disparate entities. Fisher, Haldane, and Wright were the first to alter this thinking. However, their work was heavily mathematical and often difficult for biologists and others to comprehend. Into this situation came the Russian-born naturalist and geneticist, Theodosius Dobzhansky, and his book Genetics and the Origin of Species, in 1937. This was the first blow to be struck for the Evolutionary Synthesis, the melding of Darwinian and Mendelian thought with its application and testing in the field, the natural laboratories of population genetics and evolution.

Other architects of the Evolutionary Synthesis continued this work. Several publications, directly inspired by Dobzhansky’s book, appeared in the 1940s. Ernst Mayr’s book Systematics and the Origin of Species (1942) focused more specifically on systematics and the problem of speciation, a concept poorly understood by many biologists of the time. George Gaylord Simpson’s Tempo and Mode in Evolution (1944) tied in evolutionary change from a paleontological viewpoint. Finally, G. L. Stebbins’s Variation and Evolution in Plants (1950) broadened the synthesis to include botanical studies and plant genetics. These works together helped unify and strengthen Dobzhansky’s original thesis and provided the basis for further developments in the Evolutionary Synthesis that impacted many fields of study, including biological anthropology and the study of human evolution.

Although the developing eugenics movement, discussed in the next section, applied genetics to human variation, it was not the only means by which human genetics began to develop. Some of the earliest attempts to apply Mendelian principles to human characters occurred in the early 1900s. An example was the English physician Archibald Garrod’s description of alkaptonuria, the first recognized inborn error of metabolism in humans, as being inherited in an autosomal recessive manner. This means that an affected child must inherit a copy of the disease-causing mutation from each parent; when both parents are unaffected carriers, each child has a one-in-four chance of being affected.
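The one-in-four figure follows directly from Mendel’s law of segregation. The Python sketch below is a hypothetical illustration, not Garrod’s own analysis: it enumerates the four equally likely allele combinations a child can receive, using our own labels “A” for the normal allele and “a” for the disease-causing allele.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def offspring_genotypes(parent1: str, parent2: str) -> dict[str, Fraction]:
    """Genotype probabilities for one child, given two-letter parental genotypes.

    Each parent passes on either of its two alleles with probability 1/2,
    independently of the other parent (Mendel's law of segregation).
    """
    # Sorting puts the uppercase allele first, so "aA" is recorded as "Aa".
    counts = Counter("".join(sorted(a + b)) for a, b in product(parent1, parent2))
    total = sum(counts.values())
    return {genotype: Fraction(n, total) for genotype, n in counts.items()}


# Both parents are unaffected carriers of the hypothetical allele "a".
children = offspring_genotypes("Aa", "Aa")
print(children)
print("chance a child is affected:", children.get("aa", Fraction(0)))
# prints "chance a child is affected: 1/4"
```

Using exact fractions rather than floating-point numbers keeps the Mendelian ratios exact, so the 1:2:1 genotype ratio appears as 1/4, 1/2, and 1/4.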

Human genetics was already developing as a field during the first three decades of the 20th century, but it was not until the 1930s that it began to shed the baggage of eugenics. Garrod continued to lay the foundations of human biochemical genetics with his book The Inborn Factors in Disease in 1931. Three years later, Asbjorn Folling described phenylketonuria (PKU), one of the classic metabolic diseases. The first study of human identical twins, by Horatio H. Newman and his coworkers, was published in 1932. That same year, Haldane made the first estimate of the mutation rate of a human disease gene, the gene for hemophilia. Fisher was also applying population genetics principles to human genetics, publishing a major work on ascertainment and linkage studies in human pedigrees in 1934. The year 1935 saw the publication of the book Principles of Heredity by Laurence H. Snyder, who was at the same time establishing a research program for human genetics at Ohio State University.

Beginning in the 1930s and continuing throughout the 1940s, there were signs that genetics would develop into a biochemistry-based and eventually molecular-based science. Muller’s work on X-ray mutagenesis had stimulated a search for the chemical basis of mutation and at least two lines of research. The first began in an interesting fashion and illustrates that genetics, indeed any science, has sometimes relied on luck for advancement. This line of research, which would broaden understanding of gene function, was pursued by Charlotte Auerbach and her colleagues. Auerbach, a German Jew, left the increasingly threatening situation in Germany in 1933. She soon held a position in genetics research at the Institute of Animal Genetics in Edinburgh, Scotland. Muller visited the Institute in 1938 and is credited with suggesting research on chemical mutagenesis to Auerbach before his departure to America.

Auerbach and her coworkers began to study the effect of mustard gas on the mutation rate of the fruit fly Drosophila melanogaster in 1940. They hoped to find a way to produce directed mutations that would enable studies of the nature of the gene. Mustard gas induced mutations similar to those caused by X-rays but produced more lethal mutations. The anticipated directed mutation did not materialize. Because of World War II, the results were not published until 1946. The delay did not dull the significance of the work. Further discoveries of other chemical mutagens, stemming from Auerbach’s work, showed the desired directed mutation effect and proved to be invaluable to genetic research.

In the second direction taken from Muller’s work, George Beadle and Edward Tatum in 1941 used Neurospora conidia treated with X-rays to revolutionize the way that geneticists searched for metabolic mutants. After treatment with ionizing radiation, each spore was grown on two different media. The first, the complete medium, contained everything a cell would need for optimal growth; the second, the minimal medium, provided only the barest essentials for cellular survival. Once a spore was found that could grow on complete but not on minimal medium, metabolic precursors could be added to the minimal medium one at a time to determine which metabolic pathway had been disrupted and at what step. Their findings seemed to show that one gene is responsible for the production of one, and only one, enzyme. Beadle and Tatum proposed the “one gene, one enzyme hypothesis,” the first biochemical explanation for the function of the gene. Beadle and Tatum contributed two additional innovations. The first was the establishment of Neurospora as a microorganism ideally suited for genetic studies, crucial groundwork toward eventually making bacteria genetically useful in the laboratory. The second, and more important, was the linking of an organism’s genes with its biochemistry, essentially establishing molecular genetics, or biochemical genetics, a term that Beadle preferred.
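The supplementation logic can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Beadle and Tatum’s own procedure or data: we assume a linear pathway, a single blocked step per mutant, and that every supplied compound is taken up by the cell. The pathway used (ornithine, then citrulline, then arginine, the late steps of arginine synthesis) is a convenient example, and the “precursor” label and the three strains’ growth results are hypothetical. A compound downstream of the block restores growth and one upstream does not, so the block lies just before the earliest compound that rescues the mutant.

```python
def blocked_step(pathway: list[str], rescuing: set[str]) -> tuple[str, str]:
    """Locate the blocked step in a linear biosynthetic pathway.

    pathway lists compounds in order, from a precursor present in minimal
    medium to the end product. Supplying any compound downstream of the
    block restores growth; compounds upstream of it do not. The block
    therefore lies just before the earliest compound that rescues growth.
    """
    for i, compound in enumerate(pathway):
        if compound in rescuing:
            if i == 0:
                raise ValueError("grows on the minimal-medium precursor: no block")
            # A single-block mutant should also be rescued by every later compound.
            if not set(pathway[i:]) <= rescuing:
                raise ValueError("growth pattern does not fit a single block")
            return pathway[i - 1], compound
    raise ValueError("no supplement restores growth")


# Illustrative pathway and hypothetical growth results for three mutant strains.
pathway = ["precursor", "ornithine", "citrulline", "arginine"]
mutants = {
    "strain 1": {"ornithine", "citrulline", "arginine"},
    "strain 2": {"citrulline", "arginine"},
    "strain 3": {"arginine"},
}
for name, rescuing in mutants.items():
    before, after = blocked_step(pathway, rescuing)
    print(f"{name}: blocked between {before} and {after}")
```

Each distinct block corresponds to a distinct missing enzyme, which is the kind of one-to-one correspondence that led to the one gene, one enzyme hypothesis.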

Another research discovery of the 1940s found its origins in a classic 1928 paper in which Fred Griffith reported that serological types of the bacterium pneumococcus were interchangeable. Specifically, it was shown that rough-coated pneumococci could be permanently transformed into the more virulent smooth-coated cocci capable of causing lethal infections. At the time that study was published, the laboratory directed by Oswald T. Avery in the Rockefeller Hospital of New York City had already been working with pneumococcus for over 15 years. Given his familiarity with the microorganism, Avery became convinced that the phenomenon described by Griffith held the key to how pneumococci controlled the formation of the bacterial capsule.

Determining the nature of this key to pneumococcal virulence would take another 16 years. In 1944, Avery, Colin M. MacLeod, and Maclyn McCarty published their classic paper, “Studies on the Chemical Nature of the Substance Inducing Transformation of Pneumococcal Types,” in the Journal of Experimental Medicine. In this meticulous, carefully analyzed study, the authors presented revolutionary evidence that DNA is the transforming principle that alters the construction of the pneumococcal capsule in a heritable way. The significance of this work was the idea that DNA, not nucleoprotein, is the conveyor of inheritance in living things. It was a major step toward the DNA revolution.

The Eugenics Movement

Eugenics represents a special case in the history of genetics, as its advocates and practitioners had such a profound influence on genetics during its development as a science. The eugenics movement spanned the period from the late 19th century until well into the 20th century. As mentioned, it was Galton who coined the term eugenics in 1883 to describe the improvement of the human race through selective breeding. The idea of saving humankind from a perceived genetic and moral decline struck a popular chord in nations such as England, the United States, and Germany. Indeed, over 30 countries worldwide enthusiastically embraced eugenics, each adapting the movement to fit its own local scientific, cultural, and political climate. It was supported in countries such as Argentina, Cuba, Czechoslovakia, Russia, Brazil, Colombia, Mexico, and Venezuela. Eugenics was seen by many cultures as the truest method to cleanse human society of what were then perceived as hereditary degenerations, such as moral decadence, tuberculosis, chronic unemployment, alcoholism, and a wide variety of social ills.

Scientifically, eugenicists mistakenly believed that most traits, even ones we would describe today as complex traits involving multiple loci, were governed by only one gene. On that premise, they reasoned, the improvement of human populations would be a matter of controlling which Mendelian “factors” or genes were passed on to successive generations through aggressive selective breeding, of the kind to which livestock had been subjected for centuries. According to their thinking, the selective breeding of humans included not only encouraging larger families within the middle and upper classes of society in order to propagate their genes of material success and civility but also measures to prevent the reproduction of those individuals deemed unworthy, unfit, or a burden on society. Their solution was the enforced sterilization, by vasectomy or tubal ligation, of people in asylums and of convicted criminals. They pushed the idea of sterilization so that those who were sterilized could participate in society without the need for institutionalization, thus sparing the public the cost.

In America, support for eugenics first became evident around the time of the heated debates between the Mendelians and biometricians. In 1904, Charles Benedict Davenport persuaded the Carnegie Institution of Washington to fund the creation of the Station for Experimental Evolution at Cold Spring Harbor on Long Island, New York, and in 1906, John Harvey Kellogg created the Race Betterment Foundation in Battle Creek, Michigan. Davenport was to play a major role in the development of eugenics in America.

Davenport, a well-respected scientist, had earned his undergraduate degree in civil engineering and received his PhD in biology in 1892. After short stints teaching at Harvard and the University of Chicago, Davenport arrived at Cold Spring Harbor. While investigating nonhuman breeding and inheritance, Davenport’s lab contributed significantly to basic knowledge of genetic variation, hybridization, and natural selection in animals. However, sometime around 1910, his attention was drawn more and more to human genetics.

Davenport had trained in statistical methods under Galton himself during his graduate studies. This exposure as well as the results of his animal studies set in motion a lifelong devotion to eugenic ideals. Davenport was also an enthusiast of Mendel’s work and applied these principles in humans. He became convinced that through human pedigree analysis, he would be able to predict in accordance with Mendelian principles the occurrence of such traits as feeble-mindedness or criminality as easily as hemophilia or six fingers.

In a classic example of well-intentioned but misinformed science, Davenport established the Eugenics Record Office (ERO) at Cold Spring Harbor with private funding, with himself as director. Harry H. Laughlin was appointed superintendent to take care of administrative affairs. Under Davenport and Laughlin, the ERO collected data on the inheritance of human traits, analyzed census data and population trends, encouraged courses in eugenic principles, and distributed eugenics materials in the United States and Europe.

The popularization of eugenics with the American people now began in earnest. Between 1910 and 1916, articles in periodicals written for the average citizen, such as Good Housekeeping and The Saturday Evening Post, increased tenfold. Books targeted for public consumption included such works as The Passing of the Great Race by Madison Grant (1916) and The Rising Tide of Color Against White World Supremacy by Lothrop Stoddard (1920). In 1917, The Black Stork, a decidedly prosterilization film, was released for American moviegoers. State fairs entertained and educated Americans with eugenic displays and “Fitter Family” contests, the first of which was held at the Kansas Free Fair in 1920. By 1930, eugenics was taught in many high schools. In American colleges and universities, over 350 eugenics courses were offered. Church-going citizens often heard the virtues of eugenics extolled from church pulpits. These efforts won widespread acceptance for eugenics.

Political action soon followed. As early as 1907, Indiana became the first state to pass an involuntary sterilization law, targeting individuals in mental institutions, criminals involved in more than one sex crime, and those determined to be feeble-minded via IQ tests. By 1935, 26 states had similar laws on the books, and 10 more states were poised to enact such legislation, testifying to the acceptance and popularity of eugenics in American culture. Before the repeal of the last eugenics laws (California, 1979), over 64,000 individuals had been involuntarily sterilized.

The second major arena of eugenics political action concerned immigration. The Johnson Immigration Act of 1924 (Immigration Restriction Act) was the most far-reaching and restrictive immigration act in America to that time. Congress, convinced by the limited evidence presented by Laughlin, voted to severely restrict immigration from “undesirable” nations in Eastern Europe and other regions. According to this evidence, immigrants from such areas possessed lower intelligence than Americans (as determined by English-language IQ tests given to immigrants who spoke little or no English) and a much higher preponderance of negative traits such as alcoholism and insubordination, which were viewed as heritable. Obviously, Congress thought, American society needed protection from such negative effects.

The scientific community did not blindly accept the claims of eugenics. Opposition to the movement grew steadily. By the 1920s, geneticists understood that the traits emphasized by the eugenicists were the result of complex interactions between multiple genes and that no such trait could be attributed to a single gene. Still other research showed that environmental factors greatly influenced the expression of genes. Research also demonstrated mathematically that it would be virtually impossible to eliminate a recessive gene from a population, because most copies of a rare recessive allele are carried by heterozygotes who do not show the trait and therefore escape selection.
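The arithmetic behind that conclusion is short. Suppose, as a deliberately extreme and hypothetical case, that every affected (aa) person is prevented from reproducing while AA and Aa individuals mate at random. The surviving parents carry genotypes in the proportions p²AA : 2pqAa, so the recessive allele frequency falls from q to q′ = pq / (p² + 2pq) = q / (1 + q), which gives q_n = q_0 / (1 + n·q_0) after n generations. The Python sketch below iterates this recurrence for a trait affecting 1 person in 10,000 (q_0 = 0.01); halving the allele frequency takes about 1/q_0 = 100 generations, roughly 2,500 years at 25 years per generation.

```python
def recessive_frequency(q0: float, generations: int) -> float:
    """Recessive allele frequency after complete selection against aa.

    Each generation, aa individuals leave no offspring while AA and Aa
    reproduce under random mating. The survivors carry p^2 AA + 2pq Aa,
    so the new frequency is q' = pq / (p^2 + 2pq) = q / (1 + q).
    """
    q = q0
    for _ in range(generations):
        q = q / (1 + q)
    return q


# Hypothetical trait: 1 person in 10,000 is aa, so q0 = 0.01.
q0 = 0.01
for n in (1, 10, 100, 1000):
    q = recessive_frequency(q0, n)
    print(f"after {n:4d} generations: q = {q:.5f}, affected = {q * q:.2e}")
```

Even after 1,000 generations under this policy the allele persists, carried almost entirely by unaffected heterozygotes.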

Another significant factor in America’s waning support for eugenics was the Great Depression. This economic calamity turned American society upside down. Suddenly, people in the upper classes of society found themselves with no money and no jobs. More important, they were thoroughly confused. People at this societal level had been taught by eugenics that their genetic traits were virtual guarantees of material success and employment. Now able to see that social and economic variables affected social position more than any genetic explanation could, society began to abandon eugenic ideas. When Hitler and the Nazi Party came to power in 1933, parallels and connections in eugenics between the United States and Germany were obvious. The embarrassment of America being associated with the ideals of the Nazi Party brought drastic changes to the concept of eugenics in America, to the point that the ERO, which had already changed its name to the Genetics Record Office, closed in 1939.

The rise of eugenics in Germany, viewed by many as the ultimate expression of eugenics, actually paralleled this movement in America. It was introduced in Germany late in the 19th century by physicians and was viewed as the last, best hope for the improvement of the German people, with the focus on increasing national efficiency. Eugenicists in Germany were enthusiastic and supportive of work by Davenport and Laughlin and had read Grant’s and Stoddard’s books. The progress of eugenics in America in the 1920s fueled Germany’s developing optimism that racial hygiene would prevent the total degradation of truly Germanic ideals.

Germany following World War I was a country left feeling hemmed in by enemies and alone in the global community. Early eugenic theories left many Germans, including well-known geneticists, worried that if Germany were not allowed to grow, her “best and brightest” would be forced to migrate from the country, taking their racially pure genes with them and leaving defective ones behind. With the passage of the Johnson Act and sterilization laws, the United States was sending the message to other countries that discrimination and racism were permissible if the ultimate goal was to preserve and promote the purity of human heredity. Later on, this message gave further confidence to the Nazi Party’s pursuit of racial cleansing. However, up until the Great Depression and the Nazi takeover in 1933, eugenics in Germany focused on education and social reform.

The Great Depression hit Germany hard. Immediately, the debate intensified as to how to decrease the burden of unproductive individuals on the financial resources of the state. Legislatively, distribution of aid was restructured to support productive or desirable individuals, to the detriment of those viewed as burdens or unproductive citizens. Those deemed feeble-minded, such as individuals with schizophrenia and manic-depressive disorder; those born with disorders such as Down syndrome; and criminals were immediately reduced to near-starvation levels of support. Some have suggested that this devaluing of human life set the stage for the eugenics policies and actions of the Third Reich.

Nowhere were hereditary degeneration and racial impurity more feared, or eugenics more accepted and acted upon, than in Nazi Germany. By the time that Adolf Hitler rose to power in 1933, the Nazi drive to create a healthy German people, brimming with fit workers, farmers, and soldiers for the Third Reich, had come to a fever pitch. Many medical professionals who firmly believed in the contributions of eugenics to the good of the German people were forced to align themselves with the rabid anti-Semitism of the Nazis. It was with their help that the regime’s 1933 compulsory sterilization law came into being. This law directed compulsory sterilization of those diagnosed with schizophrenia, manic-depressive disorder, many genetic disorders, and chronic alcoholism. By 1945, some 400,000 Germans had been forcibly sterilized.

Support for these efforts required a massive public education program consisting of posters, school textbooks, and documentaries portraying the sterilizations as a humane means to protect the nation from boundless evils. This type of public campaign was used not only to extol the virtues of eugenics programs but also to encourage “good” Germans to produce many more “good” Germans. One of the most notorious of these efforts was the Lebensborn project. This project originally encouraged men of the Nazi Schutzstaffel, the SS (which translates as “protection squad”), to produce children with as many willing German women as possible. Medals were handed out to those women who produced four or more children.

On a more ominous note, the Nazi regime first discussed involuntary euthanasia in 1933, but it was not put into place until the commencement of World War II. Hitler believed that the upheaval of the war would diminish public disapproval, and he was apparently correct. It was at this time that Nazi racial hygiene turned from controlling reproduction to “mercy killing” those individuals regarded as genetically inferior. The victims included people with Down syndrome and other birth defects as well as elderly psychiatric patients. By the end of the war, nearly 200,000 Germans had been killed by such methods as starvation, lethal injection of morphine, and asphyxiation by gas, administered by physicians and nurses. These programs paved the way for the eventual murder of millions of Jews in the concentration camps.

The worldwide backlash against eugenics following World War II drastically diminished public support for the movement. As the horrors of the eugenics policies of the Nazis became known, the word eugenics disappeared from the titles of journals and university courses, albeit slowly. For example, one of the authors (Meaney), during his undergraduate training in the early 1960s, enrolled in a course titled “Heredity and Eugenics.” A physician taught the course, and the content was basically what was then known about the genetics of human diseases. Despite these holdovers from the early days, the movement was essentially dead as genetics moved into its next phase.

1953-Present: Molecular Genetics

During this time period, genetics has probably changed the most and experienced its greatest acceleration in terms of discoveries and applications. It started with the discovery of the structure of DNA in 1953.

In the aftermath of World War II and the work of laboratory scientists during the 1930s and 1940s, we were on the brink of understanding the structure of DNA. After Oswald Avery and his colleagues Colin MacLeod and Maclyn McCarty showed in 1944 that DNA was likely the genetic material, the race was on to understand this deceptively simple molecule, a chain built from just four kinds of nucleotides. A group of scientists in the Cavendish Laboratory, at Cambridge University in England, worked diligently to understand how DNA was structured. A sense of academic camaraderie and youthful idealism combined with outright arrogance pushed the work ahead.

One of the young scientists working on DNA at this time was Rosalind Franklin, who worked in the X-ray diffraction laboratory at King’s College London. Soon after her arrival at King’s College, she demonstrated a critical error in the work of her predecessors: she discovered that there are two forms of DNA in most sample preparations. There is a more crystalline “A form” and a wetter “B form,” which is likely closer to the form found in cells. A characteristic X-pattern in her X-ray diffraction images showed her immediately that the B form was helical in shape.

At this same time, James Watson and Francis Crick, at the Cavendish Laboratory in Cambridge, began a collaboration to model the structure of DNA. They attempted to solve the structure of DNA as one might put together a puzzle, matching likely pieces in an attempt to produce a coherent picture. In November 1951, Franklin presented her findings about the two forms of DNA. Watson was among those who listened. Intrigued by her results, Watson and Crick soon invited Franklin to see the model they had constructed so far. A brief meeting was held. Apparently preoccupied with her own work, Franklin was quick to discount the model and left as soon as possible. The reaction by their superiors was quick, and Watson and Crick were ordered to abandon their model. But the story did not end here.

The importance of Franklin’s work, in particular the X-pattern suggesting the helical structure of DNA, was not lost on Watson and Crick. Although they had been forbidden to build models, the structure of DNA was never far from their thoughts. Without her knowledge, Franklin had provided the “Eureka” moment when her data were presented. Maurice Wilkins, who worked with Franklin in the X-ray diffraction laboratory, also maintained an interest in the structure of DNA and discussed the subject with his friend Crick on numerous occasions. Wilkins was able to obtain one of Franklin’s photos of DNA, Photo 51, an unusually sharp image of the B form. It made its way to the Cavendish Laboratory, where Watson and Crick saw themselves in the last leg of the DNA race with Linus Pauling. Pauling had deduced a flawed structure, but Watson and Crick knew that with a good X-ray image, Pauling would beat them. Perhaps even more important than the confirmation that the molecule was helical, Wilkins had calculated that the helix contained 10 units per turn, with a repeat distance of 34 angstroms per turn. With the geometry known, it remained to decide how the bases were stacked in the working model.

To beat Pauling to the secret of life, the group still had to publish, and the Cambridge scientists were up against a serious problem: they had no experimental data of their own. Without experimental evidence, they had a nice model but nothing to back it up. A solution was brokered: three interrelated papers would be submitted to Nature. As it turned out, the three articles were published in a single issue of Nature in 1953. The one by Watson and Crick came first, then one by Wilkins and his colleagues, and finally one by Franklin and Raymond Gosling, a graduate student who had worked for Franklin. Franklin’s work was presented as a confirmation of the model, not as a primary factor in its determination. Into her own previously written paper, she inserted a statement that the data of Franklin and Gosling were consistent with Watson and Crick’s proposed model. Watson and Crick, who acknowledged that their work had been stimulated by a general knowledge of Franklin’s research, also referenced her work.

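Adding further significance to the discovery of the structure was research performed by Alfred Hershey and Martha Chase. Even after the work of Avery and his colleagues, the idea that DNA was the heritable substance remained highly contentious; it was still widely believed that DNA was too simple to be the genetic material and that the more complex proteins were the best candidates. Using bacteriophages whose DNA was labeled with radioactive phosphorus and whose protein coats were labeled with radioactive sulfur, Hershey and Chase showed that the viral DNA, not the protein coat, entered infected bacteria and directed the production of new viruses. Their work finally confirmed that DNA was the heritable substance.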

It almost goes without saying that the discovery of the structure of DNA launched the careers of Watson and Crick. There were still many questions that needed answering, such as how DNA replicated and how proteins were formed from DNA. But these watershed discoveries in the early 1950s were to forever change the landscape of genetics.

In retrospect, Crick’s now famous announcement at a local pub that he and Watson had discovered the secret of life might have been a tad brash. Far from unlocking the mechanism of heredity, they had at this point been able only to describe the appearance of the key. This was a major step, to be sure, but only a step. There remained the question of just how it worked. How does DNA cause the formation of proteins, and how do these proteins assemble into an egg, a hand, a turnip, or an elephant? Key to this question was deciding whether the geometry of the DNA molecule directly catalyzed protein assembly or whether DNA carried the directions for other parts of the cell to complete the assembly. Looking at the “recipe,” it was obvious that with four bases, a two-letter code would provide only 16 (4 × 4) combinations, too few to specify the required 20 amino acids, while a three-letter code would provide 64 (4 × 4 × 4), an excess of possibilities. Two solutions were published for the mechanism, and both were wrong. Cosmologist George Gamow proposed his “diamond code” theory, based on overlapping triplets that directly assembled proteins on the surface of the helix. The scheme was elegant because it allowed for the coding of exactly 20 amino acids, but Crick discounted it: overlapping triplets restrict which amino acids can follow one another, so the scheme could not accommodate the varied protein sequences that Frederick Sanger had been describing as he progressed toward the structure of the bovine insulin molecule. Crick preferred the “sequence hypothesis,” in which DNA coded for the remote assembly of proteins. While his hypothesis was correct, his proposed code was flawed. In this code, only one arrangement in each set of rotations of the same three letters (for example, ABC, BCA, and CAB) would code for an amino acid, while the other arrangements were noncoding, so that the correct starting point for reading could always be identified. This model also gave precisely 20 amino acids.
As simple and logical as this seemed, it has since been shown that every triplet has a specific meaning: 61 of the 64 codons specify amino acids, with several codons for most amino acids, and the remaining 3 are stop codons.
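The counting argument behind these proposals can be checked directly. The short Python sketch below is an illustration added here, not code from the historical work; the four-letter RNA alphabet `ACGU` and the function names `code_capacity` and `comma_free_capacity` are assumptions. It counts the words a two- or three-letter code can form and reproduces only the arithmetic behind Crick's comma-free proposal (discard the four single-base triplets, then keep one triplet from each set of rotations). It does not model the full comma-free reading constraints.

```python
from itertools import product

BASES = "ACGU"  # four RNA bases (DNA uses T in place of U)


def code_capacity(word_length):
    """Number of distinct code words of a given length over a four-letter alphabet."""
    return len(BASES) ** word_length


def comma_free_capacity():
    """Count amino acids a 'one triplet per rotation set' code could specify.

    Simplified reconstruction of the arithmetic only: drop the four triplets
    made of a single repeated base (AAA, CCC, ...), group the remaining
    triplets into sets of rotations (ABC, BCA, CAB), and let one member of
    each set carry meaning.
    """
    rotation_sets = set()
    for letters in product(BASES, repeat=3):
        t = "".join(letters)
        if len(set(t)) == 1:  # AAA, CCC, GGG, UUU look the same in every rotation
            continue
        rotations = {t, t[1:] + t[0], t[2:] + t[:2]}
        rotation_sets.add(min(rotations))  # one canonical member per set
    return len(rotation_sets)


print(code_capacity(2))       # 16: too few for 20 amino acids
print(code_capacity(3))       # 64: more than enough
print(comma_free_capacity())  # 20: (64 - 4) / 3
print(code_capacity(3) - 3)   # 61 sense codons once the 3 stop codons are set aside
```

Because a three-letter word either repeats one base or has three distinct rotations, the 60 remaining triplets fall into exactly 20 sets, which is why the comma-free scheme arrived at precisely 20.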

It would not be until the early 1960s that the mechanism for communicating DNA’s message through the cell, messenger RNA (mRNA), became known, providing a better understanding of the code. Ribosomes had already been identified in the 1950s as the site of protein synthesis, and scientists had synthesized RNA. In 1958, Crick described the central dogma of molecular genetics: information flows from DNA to RNA to protein. In 1961, he and Sydney Brenner, with their colleagues, demonstrated that the genetic code is a triplet code. That same year, Brenner reported with François Jacob and Matthew Meselson that mRNA is the molecule that carries the message from DNA to the ribosomes in the cytoplasm, where protein synthesis occurs. By 1965, the codons for all 20 amino acids that make up proteins had been deciphered.

Molecular genetics was not the only area of genetics in which great strides were made during the 1950s and 1960s. Despite the aftermath of World War II and what had occurred in the name of eugenics, new technological breakthroughs in genetics were increasingly applied in human genetics, especially in the study of human diseases. It was not until 1956 that the correct number of chromosomes in a normal human cell was established to be 46. By 1960, methods had been developed to culture blood lymphocytes so that their chromosomes could be fixed on slides, stained, and examined under the microscope for abnormalities in number. These advances prompted researchers to examine the cells of people with syndromes that had been described clinically in earlier years, to see whether their chromosomes were abnormal. Many of the common chromosomal abnormalities, such as trisomy 21 in Down syndrome, were reported during the late 1950s and early 1960s. Cytogenetic techniques were also applied to the study of cancer. In 1960, an abnormal chromosome known as the “Philadelphia chromosome” was reported to be associated with chronic myeloid leukemia. Later in the 1960s, it was discovered that cells from amniotic fluid could be cultured and their chromosomes examined for abnormalities, paving the way for the development of prenatal genetics. Finally, in 1970, it was reported that human chromosomes could be stained with DNA-binding fluorescent dyes to produce unique banding patterns, so chromosomes could now be distinguished by more than their size and shape. All of these discoveries, in turn, created the need for more cytogenetic testing laboratories and for trained professionals to direct them.

By the late 1950s, grant support was available for the training of physicians to oversee the clinical and laboratory evaluation of patients and the counseling of families. Before the developments in human cytogenetics, PhD geneticists provided some genetic counseling, almost exclusively in university-based settings. The training of physicians began a shift toward providing counseling within clinics, where patients were seen and diagnosed and their families were counseled about the genetic disorder and its recurrence risks. From their origin in the 1950s and 1960s, genetic services have increasingly broadened to include genetic evaluation and testing at all phases of the human life cycle, including prenatal genetics, newborn screening, testing for adult-onset diseases such as cancer, and more.

The 1970s began with much controversy. This stemmed from the publication in 1969 by Arthur Jensen, an educational psychologist at the University of California at Berkeley, of a paper in which he presented data suggesting that racial differences in intelligence, as measured by IQ tests, were primarily due to genetic differences between the populations. Jensen based his argument on data showing that the heritability of IQ (i.e., the proportion of variation in IQ scores that is due to genetic variation) was about 80% in the White European populations for which IQ test data were available. He asserted that if the heritability of IQ was 80% within these populations, the same applied to the difference in average IQ between Blacks and Whites, and therefore most of that difference was due to genetic differences between the populations.

Jensen’s work instigated an avalanche of criticism from geneticists, led by the population geneticist Richard Lewontin. Lewontin published a series of papers in which he explained the concept of heritability and showed that a within-population estimate of heritability, like the one Jensen used, cannot by itself explain differences between populations, which may be due to environmental as well as genetic factors. He went on to investigate whether variation in genetic traits such as the human blood groups and proteins lies mostly among racial populations or within them. Lewontin’s analyses showed clearly that about 85% of the variation for these genetic traits is within rather than between populations. Molecular geneticists in the 1990s used DNA markers to replicate Lewontin’s research, and the results were strikingly similar. Therefore, the vast majority of genetic variation overall is within rather than among racial or ethnic populations.
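The central point of the critique, that heritability measured within a population does not tell us why two populations differ, can be made concrete with a toy calculation. In this minimal Python sketch every number and name (`GENETIC`, `ENV_GROUP_A`, `ENV_GROUP_B`, `within_heritability`) is hypothetical: two groups share exactly the same genetic values, and one group lives in a uniformly poorer environment. Heritability within each group is then 100%, yet the entire gap between the group averages is environmental. It is a deliberately extreme illustration, not a model of any real IQ data.

```python
from statistics import fmean, pvariance

# Hypothetical genetic contributions to a trait; both groups share these exactly.
GENETIC = [-3.0, -1.0, 0.0, 1.0, 3.0]

# Hypothetical environmental effects: identical for everyone within a group,
# but group B lives in a uniformly poorer environment (e.g., poorer soil).
ENV_GROUP_A = 0.0
ENV_GROUP_B = -5.0

# Trait value (phenotype) = genetic contribution + environmental effect.
group_a = [g + ENV_GROUP_A for g in GENETIC]
group_b = [g + ENV_GROUP_B for g in GENETIC]


def within_heritability(genetic, phenotype):
    """Share of the trait variance within one group that is due to genetic variance."""
    return pvariance(genetic) / pvariance(phenotype)


h2_a = within_heritability(GENETIC, group_a)
h2_b = within_heritability(GENETIC, group_b)
gap = fmean(group_a) - fmean(group_b)

print(f"heritability within A: {h2_a:.0%}")  # 100%
print(f"heritability within B: {h2_b:.0%}")  # 100%
print(f"difference in averages: {gap}")      # 5.0, caused entirely by environment
```

A high within-group heritability is fully compatible with a between-group difference that has no genetic component at all, which is why the inference from one to the other fails.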

Molecular genetics continued its rapid development during the 1970s. The first restriction endonuclease, an enzyme found in bacteria that cuts DNA at specific recognition sequences and can therefore be used to cut the DNA of other organisms at those sites, was isolated. This was a major technological advance in molecular genetics. In 1970, David Baltimore and Howard Temin independently made important discoveries for both molecular and cancer genetics through their work on RNA-dependent DNA polymerase, now known as “reverse transcriptase,” an enzyme found in retroviruses that can be used to make DNA from an RNA template (the reverse of the usual transcription of DNA to RNA). The same period also saw the report of the first oncogene, a gene that is able to change normal cells into cancerous ones. By 1973, Stanley Cohen, Herbert Boyer, and their colleagues had reported a recombinant DNA (rDNA) molecule, a hybrid of fused sections of DNA from different sources, that could be propagated in bacteria. Their publication is now regarded as a marker for the origins of recombinant DNA technology. This new technological advance prompted an international conference, held at Asilomar, California, in 1975, to establish guidelines for regulating its use, because of concerns that inadequate biological containment could lead to the contamination of laboratory personnel, the public, and the environment.

Many other technological advances that affected molecular genetics for years to come were made in the 1970s. DNA electrophoresis, a method used to separate DNA molecules, usually in a gel, by their electrical charge, shape, and size, was improved. The Southern blot (named for Edward M. Southern) was developed: in this method, DNA fragments are separated in a gel, denatured into single strands, and transferred to a special filter, where radioactive probes are applied to identify specific DNA segments. Also, the first complementary DNA (cDNA) cloning was demonstrated. This method depends on reverse transcriptase, which uses mRNA as a template to make DNA copies; these copies can then be cloned to make multiple replicas. By the late 1970s, several laboratories were developing methods for sequencing DNA. Frederick Sanger and his colleagues reported in 1977 a method of DNA sequencing that had a major impact on the research leading to efforts such as the Human Genome Project. That same year, Sanger’s group also produced the first fully sequenced DNA genome, that of the bacteriophage φX174. In 1976, Boyer and Robert Swanson had founded Genentech, often described as the first biotechnology company. Genentech pioneered the use of recombinant DNA technology to develop many pharmaceuticals for treating human diseases, including somatostatin, the first human protein produced through biotechnology. In 1978, scientists at Genentech cloned the human insulin gene, paving the way for the substance to be produced through rDNA technology. Recombinant human insulin reached the market in 1982, changing the treatment of diabetes from the use of nonhuman insulin to treatment using biotechnology-derived insulin. One could see already that the impact of molecular genetics on medicine was going to be immense.

During the 1980s and 1990s and into the new millennium, genetics has continued to develop at an increasingly accelerated pace. The 1980s was a period of tremendous technological advances in molecular genetics and also in the application of this technology across all areas of genetics. Recombinant DNA technology continued to develop and be applied in such diverse pursuits as the improvement of industrial microbes, genetic engineering of plants, and the diagnosis of human diseases. Biotechnology companies were having major successes in the cloning of genes, which provided a stimulus for investments from the private sector. The DNA-sequencing technology that originated in the 1970s was advanced significantly through improvements in instrumentation to increase sequencing capacity. The United States and other governments worked to increase fiscal support for these efforts, with an eye toward a future that was to include the Human Genome Project and sequencing of diverse animal and plant genomes.

Many other technological innovations had their origins in the 1980s. Polymerase chain reaction (PCR), a method used to amplify specific DNA sequences rapidly from small amounts of double-stranded DNA, was developed by Kary Mullis and his colleagues. Today, PCR is used not only in genetics but throughout the biological sciences and medicine. Its application made possible the rapid development of DNA markers for multiple uses and of many diagnostic methods for human diseases. Another major breakthrough was the use of restriction fragment length polymorphisms (RFLPs): differences in the lengths of the DNA fragments that restriction enzymes produce, arising because differences in the base pair sequence (mutations) create or destroy the enzymes’ recognition sites. David Botstein and his coworkers had proposed in 1980 a means of mapping the full human genome based on this technology, and RFLPs were soon being used as markers of the chromosomal location of specific genetic diseases. The first genetic disease to be mapped in this manner was Huntington’s disease, which was reported in 1983 to be located on chromosome 4. Instances of disease genes so identified rapidly multiplied. In 1987, yeast artificial chromosome (YAC) technology was reported: artificially constructed chromosomes that can carry very large segments of DNA in yeast cells, used to make gene libraries and to transfer genes among species. This method, with other advances, allowed scientists to construct libraries of genomic DNA, a resource of significant application for any of the emerging genome projects. Another technological innovation of the 1980s was transgenic technology in both plants and animals. This technology allowed scientists to produce organisms into which genes from other species had been transferred and incorporated into the germ line. One important use has been to transfer human disease genes into organisms such as mice (transgenic mice) to study their effects, with the ultimate aim of developing effective treatments.
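Two of the ideas in this paragraph lend themselves to a small worked example. The Python sketch below is illustrative only: the DNA strings are invented, and the names `fragment_lengths` and `pcr_copies` are hypothetical. It uses the recognition site of the restriction enzyme EcoRI (GAATTC, cut between the G and the first A) to show how a single base change that destroys one site turns three fragments into two, which is the basis of an RFLP. It also shows why PCR amplifies DNA so quickly, under the idealized assumption that every cycle exactly doubles every target molecule. The sketch treats DNA as a single strand and ignores sticky ends and real-world PCR efficiency.

```python
import re

ECORI_SITE = "GAATTC"  # EcoRI recognition sequence
ECORI_CUT = 1          # EcoRI cuts after the first base: G^AATTC


def fragment_lengths(seq, site=ECORI_SITE, cut=ECORI_CUT):
    """Lengths of the fragments left after cutting seq at every copy of site."""
    cuts = [m.start() + cut for m in re.finditer(re.escape(site), seq)]
    bounds = [0] + cuts + [len(seq)]
    return [end - start for start, end in zip(bounds, bounds[1:])]


def pcr_copies(start_copies, cycles):
    """Idealized PCR yield, assuming each cycle exactly doubles every target molecule."""
    return start_copies * 2 ** cycles


# Two invented alleles of the same 42-base region. In allele_2 a single base
# change (GAATTC -> GATTTC) destroys the second EcoRI site.
allele_1 = "A" * 10 + "GAATTC" + "T" * 10 + "GAATTC" + "A" * 10
allele_2 = "A" * 10 + "GAATTC" + "T" * 10 + "GATTTC" + "A" * 10

print(fragment_lengths(allele_1))  # [11, 16, 15]
print(fragment_lengths(allele_2))  # [11, 31]
print(pcr_copies(1, 30))           # 1073741824, about a billion copies
```

On a gel, the two alleles would produce different band patterns, and that visible difference is what lets such a variant serve as a marker for mapping.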

Although the innovations of molecular genetics probably garnered the most headlines during the 1980s, there were many substantive developments in the use of genetics knowledge. In the United States, a federal bill and appropriation of funds led to the expansion of genetics services across the states, beginning in the mid-1970s, to ensure their availability in all sectors of the population. Although there were significant cutbacks in these funds during the early 1980s, clinical and laboratory services for genetic diseases continued to expand, as new genetic tests became available and other sources of support were tapped. The 1980s saw the first real attention paid to the quality of these services through the formation of public-health-funded, multistate networks to ensure that both laboratory and clinical services met a peer-determined standard of quality. Relevant to anthropology, there were public-health-funded projects starting in the late 1980s and continuing into the 1990s to improve the cultural competence of the delivery of genetic services. Finally, the 1980s produced an expansion of genetics support groups for individuals with genetic diseases and their families and a rapid expansion in the availability of genetics information, which was only the bare beginning of what was to come in the 1990s.

The Human Genome Project (HGP) was by far the most significant accomplishment of the 1990s. It began as a controversial proposal by the U.S. Department of Energy, and skepticism was deeply rooted. The project was initially viewed as technologically impossible and likely to consume vast sums of money. Strong support from the National Academy of Sciences and the U.S. Congress made it possible for the HGP to be initiated in the United States in 1990 by the National Institutes of Health (NIH) and the Department of Energy. During the early part of the decade, the HGP converged with similar efforts in other nations to become an international investment.

The HGP officially kicked off in October 1990, with a budget of $60 million. Its primary goal was to determine the entire sequence of the 3 billion chemical bases in the DNA that comprises our genome, or, as some have put it, to uncover the “instruction manual” for Homo sapiens. James Watson was chosen to lead the project. He swiftly made a number of decisions concerning the goals of the HGP. One involved devoting 5% of the budget toward the study of ethical, legal, and social implications. Watson, among others, viewed this as extremely important considering the many issues concerning genetic discrimination, inadequate treatment options, and eugenics.

Watson, beset by a series of conflicts and controversies for which his personality was probably ill-suited, stepped down from the directorship of the HGP in April 1992. Francis Collins, an accomplished and well-respected medical geneticist from the University of Michigan, was recruited to replace Watson. Collins provided the leadership for the HGP to reach its broad objectives, which included development of genetic and physical maps of the human genome and mapping and sequencing of simpler model organisms, such as bacteria, yeast, roundworm, and the fruit fly.

While Collins continued his work on the HGP, Craig Venter, a scientist who had been at the NIH during Watson’s tenure, was about to engage in large-scale DNA sequencing and potential gene patenting. After resigning from the NIH in July 1992, Venter founded The Institute for Genomic Research (TIGR), which became one of the world’s largest DNA-sequencing centers. In 1998, Venter took the helm of a new company, Celera Genomics, and was applauded by some for his effort to sequence the genome privately, because it promised to speed up the generation of the human sequence. At that point, only 4% of the genome sequence was complete. The U.S. House of Representatives Subcommittee on Energy and the Environment held a hearing to discuss the implications of Venter’s entry into human genome sequencing. Both Venter and Collins addressed Congress concerning genome sequencing. In his testimony, Collins stressed the importance of meeting the standards that had been developed by the international community of human genome scientists. The race to unlock human DNA continued. Collins and Venter eventually agreed to a joint announcement, and on June 26, 2000, they announced at the White House with President Bill Clinton that a rough draft of the human genome sequence was complete.

During the 1990s and into the new millennium, numerous other developments occurred. In 1990, the first attempt at human gene therapy took place, involving a child with an immune system disorder. In 1994, the highly publicized book The Bell Curve, by Richard Herrnstein and Charles Murray, was published. The book discussed the relationship between IQ and ethnic groups and was a throwback to the controversy that had erupted 25 years earlier over Jensen’s article on race and IQ. It produced a similar level of outcry from scientists and the public alike, mostly in response to its strong tones of genetic determinism and Social Darwinism. By 1994, genes that predispose individuals to disease were being identified, including the ob gene, associated with obesity, and the breast cancer genes. In 1995, the sequence-tagged site (STS) gene-mapping technology was developed. In this method, short sections of cloned DNA (100-200 bases in length) are randomly selected and sequenced to mark the larger DNA sections in which they are found. It effectively sped up the work of the HGP. A year later, a national survey examined public attitudes toward genetics and found, not surprisingly, that the public viewed genetics with fear and mistrust. More fuel for public misgivings came in 1997, when Scottish scientists announced that they had cloned a sheep named “Dolly.” Barely a year later, reports by teams of researchers working with embryonic stem cells added to public concerns. The same year, a gene map of the human genome showed the locations of more than 30,000 genes. The complete map of the human genome was published ahead of schedule, 3 years later.

Completion of the human genome sequence is a major milestone for biology, health, and society. However, the role of genetic information in disease prediction and prevention has been viewed with uncertainty. One source of this uncertainty is the number of DNA variants that are likely to be linked to diseases such as cancer, cardiovascular disease, and other complex diseases. In addition, early detection is an issue because the risk conferred by a given variant is uncertain. The patterns of transmission for developing a certain illness are not always predictable and, in the case of many common adult-onset diseases, depend on environmental effects, including social, economic, and other environmental exposures. Information about susceptibility to many diseases is expected to improve, giving people in the future the ability to take steps to reduce their risks.

Interventions such as ongoing medical assessments, lifestyle modifications, and diet or drug therapy will be offered to those at risk. For example, people identified through a genetic test as being at risk for colon cancer can receive regular screening of the colon, which could help prevent premature deaths. Another concern is whether people who already have poor access to health care will be able to obtain genetic diagnosis and the drug therapies and other interventions that follow from it. This may very well create another category of discrimination with respect to services for genetic diseases.

Genetics has entered the era of genomics and bioinformatics as a result of the sequencing work on a number of plant and animal genomes and the virtual explosion of databases and comparative information produced by these respective technologies. The convergence of molecular genetics with computer technology has produced far more information than any single person could comprehend. What we do know is that cracking the human and other genomes is just the beginning in our quest for a more complete understanding of genetics. The next challenges will be to understand how genomes direct and coordinate the development of plants and animals, including humans, and how the environment is involved in developmental processes. Then, the ultimate challenge will be how to use the immense knowledge base of genetics to improve our lot while protecting the only planet we currently inhabit and its diverse biology.
